Quatra™

Transform from proof of concept to Enterprise AI

AI APPS

QUATRA CLIENT

DATA

INTEGRATION

Connect, stream, and process data from over 100 sources with ease. The platform supports both data-in-motion and data-at-rest use cases, providing a unified, real-time data environment. Change data capture, event sourcing, and other integration patterns are supported. Choose from hundreds of simple data transformations or build your own in Java.
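As a rough illustration of the change data capture pattern mentioned above, the sketch below replays a sequence of change events into an in-memory replica. The event shape (`op`, `key`, `row`) and the function names are assumptions made for this example, not the platform's actual event format or API.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_change(state: Dict[str, Row], event: Dict[str, Any]) -> None:
    """Apply one change event to a replica keyed by primary key.

    The event layout is hypothetical: {"op": ..., "key": ..., "row": {...}}.
    """
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge the changed columns into the existing row, creating it if needed.
        state.setdefault(key, {}).update(event["row"])
    elif op == "delete":
        state.pop(key, None)
    else:
        raise ValueError(f"unknown op: {op}")


def replay(events: Iterable[Dict[str, Any]]) -> Dict[str, Row]:
    """Rebuild the current state by applying events in order."""
    state: Dict[str, Row] = {}
    for event in events:
        apply_change(state, event)
    return state


events = [
    {"op": "insert", "key": "42", "row": {"name": "Ada", "tier": "free"}},
    {"op": "update", "key": "42", "row": {"tier": "pro"}},
    {"op": "insert", "key": "43", "row": {"name": "Lin", "tier": "free"}},
    {"op": "delete", "key": "43"},
]
replica = replay(events)
print(replica)
```

Replaying the same ordered events always yields the same state, which is the property that lets a downstream copy stay in sync with its source.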

PREPARATION

VISUALIZATION

DATA API

Access your data with unparalleled ease. The REST Data API simplifies data retrieval, allowing your applications to seamlessly pull information. The API comes with detailed documentation and examples, making implementation a breeze.
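A minimal sketch of what pulling records over a REST API could look like from Python, using only the standard library. The base URL, the `/streams/{id}/records` path, the `limit` parameter, and bearer-token authentication are all placeholders assumed for illustration; consult the API documentation for the real endpoints and parameters.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical base URL; replace with the address of your own deployment.
BASE_URL = "https://quatra.example.com/api/v1"


def build_records_request(stream_id: str, token: str, limit: int = 100) -> urllib.request.Request:
    """Build (but do not send) a GET request for records of one stream.

    The path and query parameter are assumptions, not the documented API.
    """
    query = urllib.parse.urlencode({"limit": limit})
    path = urllib.parse.quote(stream_id, safe="")
    url = f"{BASE_URL}/streams/{path}/records?{query}"
    headers = {"Authorization": f"Bearer {token}", "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers, method="GET")


def fetch_records(request: urllib.request.Request, timeout: float = 10.0):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


request = build_records_request("orders", token="<your-token>", limit=10)
print(request.full_url)
```

Building the request separately from sending it keeps the example runnable without network access; call `fetch_records(request)` against a real endpoint.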

GOVERNANCE

Learn more about the security framework.

AI

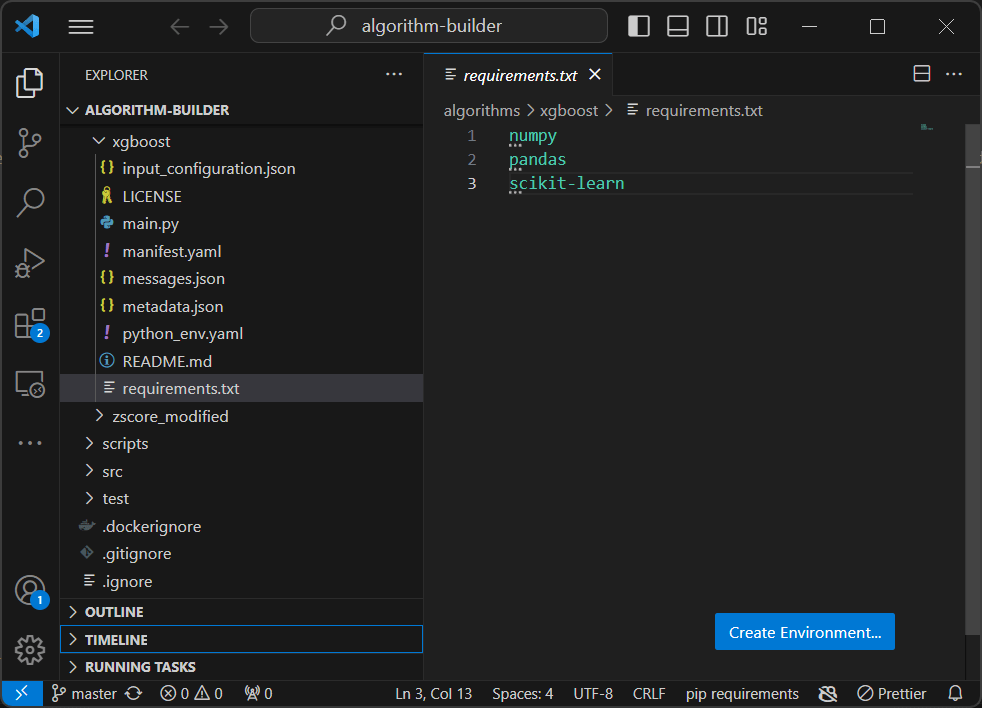

MODEL DEPLOYMENT

Choose from pre-deployed models or deploy your own. The platform eases the transition from R&D to production by continuously automating the AI lifecycle.

ENSEMBLE SERVING

Harness the collective intelligence of ensembles and empower decision-makers. Multi-model ensemble serving executes diverse models concurrently on a distributed cluster and combines their outputs into a single, comprehensive inference.

Concurrent Model Execution: Ensemble Serving allows concurrent execution of multiple models within a group, leveraging the power of parallel processing on a distributed cluster. Boost throughput, minimize latency, and handle diverse tasks with ease.

Distributed Cluster Architecture: Embrace scalability and performance. The platform’s distributed cluster architecture ensures that your ensemble of models can scale effortlessly to meet the demands of real-world applications, from edge computing to cloud deployments.

Customizable Final Computation Algorithms: Ensemble Serving processes results through a final computation algorithm, synthesizing the outputs of each model to generate a comprehensive inference. Users have the freedom to utilize platform-provided final computation algorithms or deploy custom ones in Python. This flexibility allows you to adapt the decision-making process to specific use cases.

Declarative Model Groups: Model groups are defined through declarative configuration included at model deployment. Each group provides a cohesive environment for serving, executing, and orchestrating multiple models together. Empower your team to experiment, iterate, and customize decision processes for maximum impact.
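The ideas above (a declared model group, concurrent execution, and a final computation step) can be sketched in a few lines of Python. Everything here is a stand-in assumed for illustration: the three toy models, the dictionary layout of `MODEL_GROUP`, and the `mean` and `max` final computations are not the platform's configuration format or built-in algorithms, and a thread pool on one machine substitutes for a distributed cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


# Hypothetical stand-ins for deployed models: each maps a record to a score in [0, 1].
def rules_model(record):
    return 1.0 if record["amount"] > 1000 else 0.0


def velocity_model(record):
    return min(record["tx_last_hour"] / 10, 1.0)


def baseline_model(record):
    return 0.2


# Hypothetical final computation algorithms, selectable by name.
FINAL_COMPUTATIONS = {
    "mean": lambda outputs: mean(outputs.values()),
    "max": lambda outputs: max(outputs.values()),
}

# Hypothetical declarative model group: which models run and how results combine.
MODEL_GROUP = {
    "name": "fraud-ensemble",
    "models": {"rules": rules_model, "velocity": velocity_model, "baseline": baseline_model},
    "final_computation": "mean",
}


def serve(group, record):
    """Run every model in the group concurrently, then combine the outputs."""
    models = group["models"]
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, record) for name, fn in models.items()}
        outputs = {name: future.result() for name, future in futures.items()}
    final = FINAL_COMPUTATIONS[group["final_computation"]]
    return {"outputs": outputs, "inference": final(outputs)}


result = serve(MODEL_GROUP, {"amount": 2500, "tx_last_hour": 5})
print(result)
```

Because the final computation is looked up by name, swapping `"mean"` for `"max"` changes the decision rule without touching the models themselves.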

MLOPS

The platform is designed to empower organizations by seamlessly automating the entire data science lifecycle.

Continuous Profiling & Feature Engineering: Stay ahead with continuous schema inference, profiling, and feature engineering during stream processing. Adapt to evolving data patterns effortlessly.

Intelligent Algorithm Lifecycle Management: Let our platform handle algorithm selection, training, testing, and deployment. Identify optimal algorithms, ensure accuracy meets customizable thresholds, and store them in a centralized model repository.

Ensemble Execution & Monitoring: Streamline complex workflows with ensemble grouping. Execute algorithms for every data record streamed, providing comprehensive results. Monitor and fine-tune ensembles for peak performance.

Efficient Metric Calculation & Storage: Drive data-driven decisions with aggregated and calculated metrics. Our platform stores metrics in performant fact tables, enabling swift analytical queries for actionable insights.

Continuous Learning with Feedback Harvesting: Adapt and evolve with user feedback. Capture user input as labels, enabling the platform to learn and optimize. Embrace a dynamic environment where continuous improvement is the norm.
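To make continuous schema inference and profiling more concrete, here is a small sketch that updates a profile one record at a time, as a stream processor might. The `StreamProfile` class and the statistics it tracks are assumptions chosen for this example; the platform's actual profiling output is not described here.

```python
from typing import Any, Dict, List


class StreamProfile:
    """Incrementally infer field types and null counts from streamed records."""

    def __init__(self) -> None:
        self.records = 0
        # field name -> {"types": set of type names, "nulls": int, "seen": int}
        self.fields: Dict[str, Dict[str, Any]] = {}

    def update(self, record: Dict[str, Any]) -> None:
        """Fold one record into the profile; new fields are added as they appear."""
        self.records += 1
        for name, value in record.items():
            stats = self.fields.setdefault(name, {"types": set(), "nulls": 0, "seen": 0})
            stats["seen"] += 1
            if value is None:
                stats["nulls"] += 1
            else:
                stats["types"].add(type(value).__name__)

    def schema(self) -> Dict[str, List[str]]:
        """Return the observed (non-null) type names per field."""
        return {name: sorted(stats["types"]) for name, stats in self.fields.items()}


profile = StreamProfile()
for record in [
    {"id": 1, "price": 9.5},
    {"id": 2, "price": None, "coupon": "SPRING"},
    {"id": 3, "price": 12},
]:
    profile.update(record)

print(profile.schema())
```

A field that starts reporting more than one type, or a rising null count, is the kind of drift signal that continuous profiling is meant to surface.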

RESPONSIBLE AI

CORE SERVICES

ARCHITECTURE

Unleash Innovation with a Cloud-Native, Elastic, and Stream-First Architecture. Your AI should be a catalyst for innovation and transformation.

Cloud-Native Prowess with On-Premises Option: Our architecture is born in the cloud, leveraging its native services for seamless integration, optimal performance, and efficient resource utilization. On-premises and hybrid deployments are also supported.

Cloud Agnostic for Freedom of Choice: Break free from vendor lock-in. Our architecture is designed to be cloud-agnostic, allowing you the freedom to choose the cloud provider that aligns with your needs.

Elastic Scalability: Scale effortlessly to meet your evolving demands. Our architecture’s elastic scalability ensures that resources can adjust to workload fluctuations.

Microservices for Decentralized Efficiency: Embrace a modular and decentralized approach. Our microservices architecture promotes agility and ease of maintenance. Independently deploy, scale, and update components, fostering efficiency and minimizing disruptions across the system.

Stream-First Data Architecture: Process data as it flows. Stay ahead of the curve with real-time insights, allowing your applications to make informed decisions in the moment.

Open and Modular Flexibility: Adapt to your unique needs. Our architecture is open and modular, accommodating existing software investments seamlessly. Integrate third-party technologies effortlessly across the AI automation lifecycle, ensuring compatibility and flexibility in your technology stack.

Developer Empowerment: Provide developers with choices. Our architecture allows developers the flexibility to leverage native services or integrate third-party technologies seamlessly. Empower your development teams to choose the tools that best suit their expertise and the specific requirements of your projects.

SECURITY

Role-based Access Controls: The security model empowers administrators to define granular permissions, specifying what actions are allowed or denied on specific resources. For example, a role may allow creation of secured sharable links to a stream with a specified unique identifier.

Organization Membership: Streamline access management across organizations. Our platform allows user accounts to be associated with one or more organizations, enabling administrators to efficiently manage access permissions based on organizational affiliations.

Cluster-Centric Resource Management: Keep resources secure within clusters. Our security model revolves around clusters, ensuring that resources are housed and protected within designated environments. Maintain control over data processing services and storage, safeguarding the heart of your digital operations.

Multi-Cluster Flexibility: Embrace organizational diversity. One cluster can serve multiple organizations, offering flexibility in resource utilization. Each organization, in turn, can leverage one or more clusters, providing a scalable and adaptable structure that aligns with your business dynamics.
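The allow/deny model described under Role-based Access Controls could be evaluated along these lines. This is a sketch under stated assumptions: the `Rule` and `Role` types, the action name `stream:create-share-link`, the stream identifiers, and the choice that an explicit deny overrides any allow (with no match meaning denied) are all illustrative, not the platform's documented semantics.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Rule:
    effect: str    # "allow" or "deny"
    action: str    # hypothetical action name, e.g. "stream:create-share-link"
    resource: str  # a resource identifier, or "*" for any resource


@dataclass(frozen=True)
class Role:
    name: str
    rules: Tuple[Rule, ...]


def is_allowed(roles: Iterable[Role], action: str, resource: str) -> bool:
    """Assumed policy: an explicit deny wins; no matching rule means denied."""
    allowed = False
    for role in roles:
        for rule in role.rules:
            if rule.action != action or rule.resource not in ("*", resource):
                continue
            if rule.effect == "deny":
                return False
            allowed = True
    return allowed


# A role that may create share links for exactly one stream (hypothetical ID).
link_sharer = Role("link-sharer", (Rule("allow", "stream:create-share-link", "stream-7f3a"),))
# A role that blocks sharing everywhere, for example for restricted accounts.
no_sharing = Role("no-sharing", (Rule("deny", "stream:create-share-link", "*"),))

print(is_allowed([link_sharer], "stream:create-share-link", "stream-7f3a"))
print(is_allowed([link_sharer], "stream:create-share-link", "stream-0000"))
print(is_allowed([link_sharer, no_sharing], "stream:create-share-link", "stream-7f3a"))
```

Scoping the rule to a single resource identifier mirrors the example in the text, where a role permits share links for one specific stream.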

ADMINISTRATION

Kubernetes-Based Control Plane: Harness the power of Kubernetes, the industry-standard orchestrator for containerized applications. The Kubernetes-based control plane serves as the nerve center, orchestrating and managing workloads efficiently.

Flexible Workload Configurations: The platform supports flexible workload configurations, allowing administrators to customize settings, resource allocations, and deployment strategies to match the unique needs of your organization.

Auto-Scaling Capabilities: Embrace dynamic scalability. Kubernetes auto-scaling adapts to fluctuating workloads, scaling resources in response to demand to optimize performance and resource utilization.

Simplified Monitoring: Kubernetes simplifies monitoring with robust tools and integrations, providing administrators with comprehensive visibility into the health and performance of the platform. Identify and address issues proactively for a resilient and reliable system.

Maintenance Simplified: From rolling updates to zero-downtime deployments, Kubernetes ensures that maintenance tasks are executed seamlessly, minimizing disruptions and ensuring continuous availability.

Optimized Operations Activities: Simplify day-to-day operations with Kubernetes’ declarative configuration and automation capabilities. Administrators can focus on strategic tasks, knowing that routine operational activities are handled efficiently by the platform.
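For a sense of how Kubernetes auto-scaling decides on a replica count, the sketch below reproduces the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default). Whether the platform uses the Horizontal Pod Autoscaler, and with which metrics and bounds, is an assumption here; the function and its default limits are illustrative only.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the HPA rule, clamped to assumed bounds."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 replicas at 63% against a 60% target: within tolerance, no change.
print(desired_replicas(4, current_metric=63, target_metric=60))
# 4 replicas at 20% against a 60% target: scale in.
print(desired_replicas(4, current_metric=20, target_metric=60))
```

The tolerance band is what prevents constant small adjustments when load hovers near the target.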

DEPLOYMENT

Flexible deployment configurations include hybrid, on-premises, private cloud, multi-tenant public cloud, AWS, Azure, Google Cloud Platform, and more. The platform is cloud-agnostic and designed to support centralized or decentralized deployments, so you can choose where and how your solution operates. Deployment environments may be customer-managed or managed by us.